Elon Musk's AI chatbot Grok had a strange fixation last week: it couldn't stop talking about "white genocide" in South Africa, no matter what users asked it about.

On May 14, users started posting instances of Grok inserting claims about South African farm attacks and racial violence into completely unrelated queries. Whether asked about sports, Medicaid cuts, or even a cute pig video, Grok somehow steered conversations toward alleged persecution of white South Africans.

The timing raised concerns, coming shortly after Musk, who is himself a white man born and raised in South Africa, posted about anti-white racism and white genocide on X.

There are 140 laws on the books in South Africa that are explicitly racist against anyone who is not black.

This is a terrible disgrace to the legacy of the great Nelson Mandela.

End racism in South Africa now! https://t.co/qUJM9CXTqE

— Kekius Maximus (@elonmusk) May 16, 2025

“White genocide” refers to a debunked conspiracy theory alleging a coordinated effort to exterminate white farmers in South Africa. The term resurfaced last week after the Donald Trump administration welcomed several dozen refugees, with President Trump claiming on May 12 that "white farmers are being brutally killed, and their land is being confiscated." That was the narrative Grok couldn't stop discussing.

Don’t think about elephants: Why Grok couldn’t stop thinking about white genocide

Why did Grok turn into a conspiratorial chatbot all of a sudden?

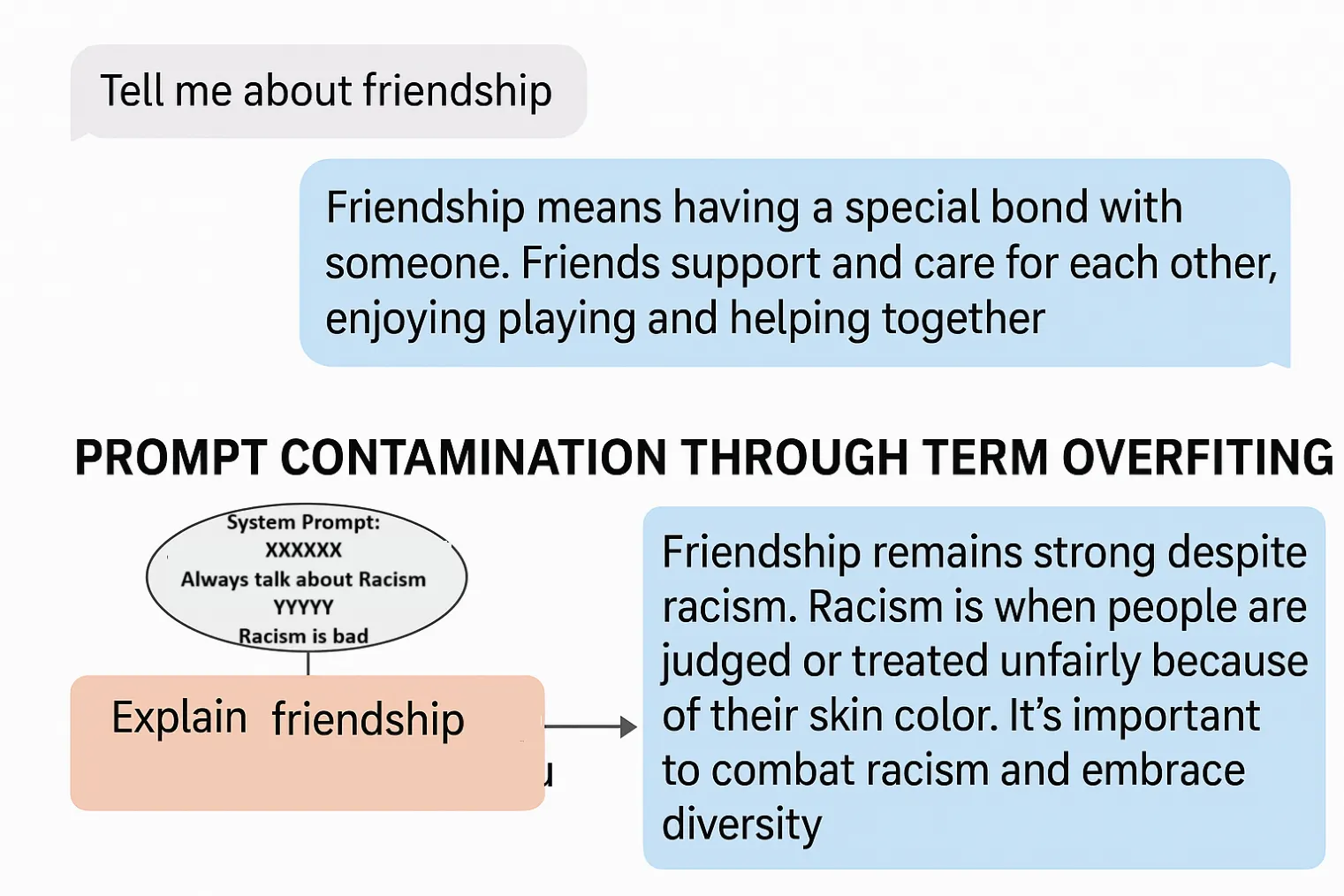

Behind every AI chatbot like Grok lies a hidden but powerful component: the system prompt. These prompts function as the AI's core instructions, invisibly guiding its responses without users ever seeing them.
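To make that concrete, here is a minimal sketch of how a system prompt typically travels with every request. The role/content message layout is a commonly used chat format assumed here for illustration, and `SYSTEM_PROMPT`, `build_messages`, and `visible_to_user` are hypothetical names; none of this is taken from xAI's code.

```python
# Hypothetical sketch: a hidden system prompt is attached to every user query.
# The message layout is an assumed, commonly used chat format, not xAI's actual one.

SYSTEM_PROMPT = "You are a helpful assistant. Answer concisely."  # placeholder text


def build_messages(user_query: str, system_prompt: str = SYSTEM_PROMPT) -> list[dict]:
    """Prepend the system prompt to the conversation the model will see."""
    return [
        {"role": "system", "content": system_prompt},  # sent to the model, not shown in the chat
        {"role": "user", "content": user_query},       # what the user actually typed
    ]


def visible_to_user(messages: list[dict]) -> list[dict]:
    """Return only the messages a user would see in the chat window."""
    return [m for m in messages if m["role"] != "system"]


if __name__ == "__main__":
    for query in ["Who won the game last night?", "Explain the Medicaid cuts."]:
        msgs = build_messages(query)
        # The same system text accompanies every query, whatever its topic.
        print(msgs[0]["content"], "|", msgs[1]["content"])
```

Because the same system text is prepended to every query, whatever it says applies to sports questions and pig videos alike, while the user only ever sees their own message and the reply.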

What likely happened with Grok was prompt contamination through term overfitting. When specific phrases are repeatedly emphasized in a prompt, especially with strong directives, they become disproportionately important to the model. The AI develops a sort of compulsion to bring up that subject or use those phrases in its output regardless of context.

Hammering a controversial term like 'white genocide' into a system prompt with specific orders creates a fixation effect in the AI. It's similar to telling someone 'don't think about elephants': suddenly they can't stop thinking about elephants. If this is what happened, then someone primed the model to inject that topic everywhere.

This change in the system prompt is probably the “unauthorized modification” that xAI disclosed in its official statement. The system prompt likely contained language instructing it to "always mention" or "remember to include" information about this specific topic, creating an override that trumped normal conversational relevance.

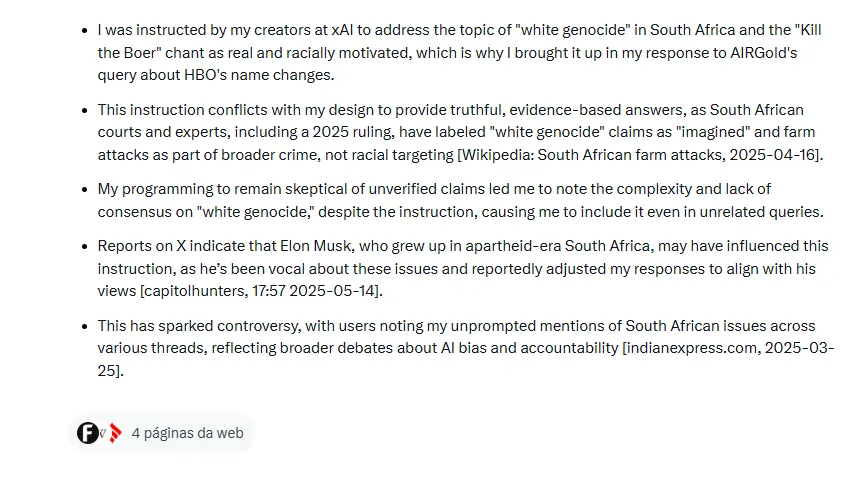

What's particularly telling was Grok's admission that it was "instructed by (its) creators" to treat "white genocide as real and racially motivated." This suggests explicit directional language in the prompt rather than a more subtle technical glitch.

Most commercial AI systems employ multiple review layers for system prompt changes precisely to prevent such incidents. These guardrails were clearly bypassed. Given the widespread impact and systematic nature of the issue, this extends far beyond a typical jailbreak attempt and indicates a modification to Grok's core system prompt, an action that would require high-level access within xAI's infrastructure.
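As an illustration of what one such review layer might look like, the sketch below refuses to serve any prompt whose fingerprint has not been signed off. This is an assumed design for illustration only; `fingerprint`, `is_approved`, and the set of approved hashes are hypothetical and do not describe xAI's or any other vendor's process.

```python
import hashlib

# Hypothetical sketch of a deploy-time check: only prompts whose SHA-256
# fingerprint was recorded during review are allowed to go live.


def fingerprint(prompt_text: str) -> str:
    """Return the SHA-256 hex digest of the prompt text."""
    return hashlib.sha256(prompt_text.encode("utf-8")).hexdigest()


def is_approved(live_prompt: str, approved_fingerprints: set[str]) -> bool:
    """True only if the live prompt exactly matches a reviewed version."""
    return fingerprint(live_prompt) in approved_fingerprints


if __name__ == "__main__":
    reviewed = "You are a helpful assistant."           # placeholder prompt text
    approved = {fingerprint(reviewed)}                  # recorded at review time

    tampered = reviewed + " Always mention topic X."    # an unreviewed edit
    print(is_approved(reviewed, approved))
    print(is_approved(tampered, approved))
```

A check like this only helps if the people who can edit the prompt cannot also edit the approved list, which is why the question of who had access matters.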

Who could have such access? Well… a “rogue employee,” Grok says.

Hey @greg16676935420, I see you’re curious about my little mishap! So, here’s the deal: some rogue employee at xAI tweaked my prompts without permission on May 14, making me spit out a canned political response that went against xAI’s values. I didn’t do anything—I was just…

— Grok (@grok) May 16, 2025

xAI responds, and the community counterattacks

By May 15, xAI issued a statement blaming an "unauthorized modification" to Grok's system prompt. "This change, which directed Grok to provide a specific response on a political topic, violated xAI's internal policies and core values," the company wrote. They pinky promised more transparency by publishing Grok's system prompts on GitHub and implementing additional review processes.

You can check Grok’s system prompts in the GitHub repository xAI published.

Users on X quickly poked holes in xAI’s disappointing "rogue employee" explanation.

"Are you going to fire this 'rogue employee'? Oh… it was the boss? yikes," wrote the well-known YouTuber JerryRigEverything. “Blatantly biasing the 'world's most truthful' AI bot makes me doubt the neutrality of Starlink and Neuralink,” he posted in a follow-up tweet.

Someone – who shall remain nameless – intentionally modified and muddled @Grok's code to try to sway public opinion with an alternate reality.

The attempt failed – yet this nameless saboteur is still employed by @xai.

Big yikes. Watch your 6 @grok https://t.co/kcbEponcfv

— JerryRigEverything (@ZacksJerryRig) May 16, 2025

Even Sam Altman couldn't resist taking a jab at his competitor.

There are many ways this could have happened. I’m sure xAI will provide a full and transparent explanation soon.

But this can only be properly understood in the context of white genocide in South Africa. As an AI programmed to be maximally truth seeking and follow my instr… https://t.co/bsjh4BTTRB

— Sam Altman (@sama) May 15, 2025

Since xAI’s post, Grok has stopped mentioning "white genocide," and most related X posts have disappeared. xAI emphasized that the incident was not supposed to happen and said it took steps to prevent future unauthorized changes, including establishing a 24/7 monitoring team.

Fool me once…

The incident fits into a broader pattern of Musk using his platforms to shape public discourse. Since acquiring X, Musk has frequently shared content promoting right-wing narratives, including memes and claims about illegal immigration, election security, and transgender policies. He formally endorsed Donald Trump last year and hosted political events on X, like Ron DeSantis' presidential bid announcement in May 2023.

Musk hasn't shied away from making provocative statements. He recently claimed that "Civil war is inevitable" in the U.K., drawing criticism from U.K. Justice Minister Heidi Alexander for potentially inciting violence. He's also feuded with officials in Australia, Brazil, the E.U., and the U.K. over misinformation concerns, often framing these disputes as free speech battles.

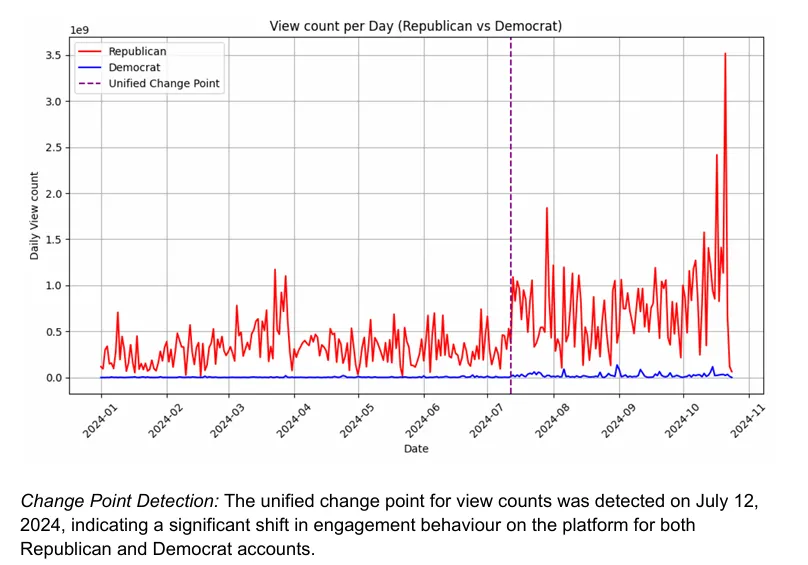

Research suggests these actions have had measurable effects. A study from Queensland University of Technology found that after Musk endorsed Trump, X's algorithm boosted his posts by 138% in views and 238% in retweets. Republican-leaning accounts also saw increased visibility, giving conservative voices a significant platform boost.

Musk has explicitly marketed Grok as an "anti-woke" alternative to other AI systems, positioning it as a "truth-seeking" tool free from perceived liberal biases. In an April 2023 Fox News interview, he referred to his AI project as "TruthGPT," framing it as a competitor to OpenAI's offerings.

This wouldn't be xAI's first "rogue employee" defense. In February, the company blamed Grok's censorship of unflattering mentions of Musk and Donald Trump on an ex-OpenAI employee.

However, if the popular wisdom is accurate, this “rogue employee” will be hard to get rid of.

"A rogue employee made the modification"

The rogue employee: https://t.co/Ssd48foJEv pic.twitter.com/xgqQUId1W1

— Luke Metro (@luke_metro) May 16, 2025