October 22, 2025

AI model accurately renders garment motions of avatars

Sadie Harley

scientific editor

Robert Egan

associate editor

AI is moving beyond merely drawing plausible images toward understanding why clothes flutter and wrinkles form.

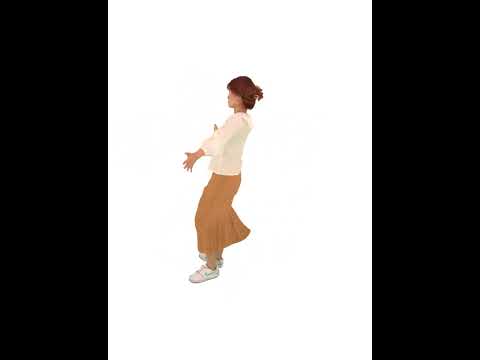

A KAIST research team has developed a new generative AI model that learns how objects move and interact in 3D space in accordance with physical laws. The technology, which overcomes the limitations of existing 2D-based video AI, is expected to enhance the realism of avatars in films, the metaverse, and games, and to significantly reduce the need for motion capture or manual 3D graphics work.

The work is published on the arXiv preprint server.

A research team led by Professor Tae-Kyun (T-K) Kim from the School of Computing has developed "MPMAvatar," a spatial and physics-based generative AI model that overcomes the limitations of existing 2D, pixel-based video generation technology.

To solve the problems of conventional 2D technology, the research team proposed a new method that reconstructs multi-view images into 3D space using Gaussian Splatting and combines it with the Material Point Method (MPM), a physics simulation technique.

In other words, the AI was trained to learn physical laws on its own by reconstructing videos taken from multiple viewpoints in 3D and allowing objects within that space to move and interact as they would in the real physical world.

This enables the AI to compute movement from an object's material, shape, and the external forces acting on it, and then to learn the physical laws by comparing the results with actual videos.
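The idea of simulating, comparing with an observation, and adjusting the physical parameters can be illustrated with a toy problem. The sketch below is an assumption-laden stand-in, not the team's method: it uses a 1D mass on a spring in place of a garment, a recorded position trajectory in place of video, and a plain grid search in place of whatever optimizer the paper uses. The names `simulate`, `loss`, `fit_stiffness`, and `TRUE_STIFFNESS` are hypothetical.

```python
def simulate(stiffness, steps=200, dt=0.01, mass=1.0, x0=1.0):
    """Simulate a 1D mass on a spring (symplectic Euler) and return its positions."""
    x, v = x0, 0.0
    trajectory = []
    for _ in range(steps):
        force = -stiffness * x      # Hooke's law
        v += dt * force / mass      # update velocity from the force
        x += dt * v                 # update position from the new velocity
        trajectory.append(x)
    return trajectory


def loss(stiffness, observed):
    """Mean squared error between a simulated and an observed trajectory."""
    predicted = simulate(stiffness, steps=len(observed))
    return sum((p - o) ** 2 for p, o in zip(predicted, observed)) / len(observed)


def fit_stiffness(observed, candidates):
    """Pick the candidate stiffness whose simulation best matches the observation."""
    return min(candidates, key=lambda k: loss(k, observed))


# Hypothetical "ground truth": in practice this would come from real footage.
TRUE_STIFFNESS = 40.0
observed = simulate(TRUE_STIFFNESS)

candidates = [float(k) for k in range(10, 101, 5)]
estimated = fit_stiffness(observed, candidates)
print(estimated)
```

A real system would compare rendered images rather than positions and would typically use gradient-based optimization, but the loop of simulate, compare, and adjust is the same.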

The research team represented the 3D space as a set of points and, by applying both Gaussian Splatting and MPM to each point, simultaneously achieved physically natural movement and realistic video rendering.

That is, they divided the 3D space into numerous small points, making each point move and deform like a real object, thereby realizing natural video that is nearly indistinguishable from reality.

In particular, to precisely capture the interactions of thin, complex objects such as clothing, they computed both the object's surface (mesh) and its particle-level structure (points), and used MPM to calculate the object's movement and deformation in 3D space according to physical laws.
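For readers unfamiliar with MPM, its core loop moves information from particles to a background grid, updates the grid, and moves the result back to the particles. The following is a deliberately simplified 1D sketch of that loop, assuming linear interpolation weights and gravity as the only force. It omits the stress computation that gives a material its stiffness, so it is not the model described in the paper, and the function name `mpm_step` and all parameters are hypothetical.

```python
import math


def mpm_step(positions, velocities, masses, n_nodes, dx, dt, gravity=-9.8):
    """One simplified 1D MPM step: particle-to-grid, grid update, grid-to-particle.

    Particles must lie inside [0, (n_nodes - 1) * dx). Internal stress is omitted.
    """
    # Linear (tent) weights linking each particle to its two neighbouring nodes.
    stencils = []
    for x in positions:
        base = int(math.floor(x / dx))
        frac = x / dx - base
        stencils.append(((base, 1.0 - frac), (base + 1, frac)))

    # Particle-to-grid: scatter mass and momentum onto the grid nodes.
    grid_mass = [0.0] * n_nodes
    grid_momentum = [0.0] * n_nodes
    for stencil, v, m in zip(stencils, velocities, masses):
        for node, w in stencil:
            grid_mass[node] += w * m
            grid_momentum[node] += w * m * v

    # Grid update: convert momentum to velocity and apply gravity.
    grid_velocity = [0.0] * n_nodes
    for i in range(n_nodes):
        if grid_mass[i] > 0.0:
            grid_velocity[i] = grid_momentum[i] / grid_mass[i] + dt * gravity

    # Grid-to-particle: gather the updated velocity and advect the particles.
    new_positions, new_velocities = [], []
    for x, stencil in zip(positions, stencils):
        v = sum(w * grid_velocity[node] for node, w in stencil)
        new_velocities.append(v)
        new_positions.append(x + dt * v)
    return new_positions, new_velocities, grid_mass


positions, velocities, grid_mass = mpm_step(
    positions=[0.25, 0.5, 0.9],
    velocities=[0.0, 0.0, 0.0],
    masses=[1.0, 1.0, 1.0],
    n_nodes=11, dx=0.1, dt=0.01,
)
```

Because the weights of each particle sum to one, the total mass on the grid equals the total particle mass, which is one of the properties that makes this transfer scheme attractive.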

Furthermore, they developed a new collision-handling technique to realistically reproduce scenes in which clothes or objects collide with each other at multiple points in complex ways.
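The article does not describe how the new collision handling works. As background only, the sketch below shows a common textbook-style collision response used in particle simulations: any velocity component pointing into a collider is removed, and the remaining sliding motion is reduced by Coulomb friction. This is an illustrative assumption, not the team's technique, and `resolve_collision` and its parameters are hypothetical names.

```python
def resolve_collision(velocity, normal, friction=0.0):
    """Return the velocity after contact with a surface.

    velocity: velocity vector of the particle (tuple of floats).
    normal:   unit outward normal of the collider surface at the contact point.
    friction: Coulomb friction coefficient (0.0 means frictionless sliding).
    """
    # Signed speed along the normal; negative means moving into the collider.
    v_n = sum(v * n for v, n in zip(velocity, normal))
    if v_n >= 0.0:
        return tuple(velocity)  # already separating, nothing to correct

    # Remove the penetrating component, keeping only the tangential motion.
    tangential = tuple(v - v_n * n for v, n in zip(velocity, normal))
    t_norm = sum(t * t for t in tangential) ** 0.5
    if t_norm == 0.0:
        return tangential  # head-on impact: the particle stops on the surface

    # Coulomb friction shrinks the tangential speed, but never reverses it.
    scale = max(0.0, 1.0 - friction * (-v_n) / t_norm)
    return tuple(scale * t for t in tangential)


sliding = resolve_collision((1.0, -2.0, 0.0), (0.0, 1.0, 0.0))
slowed = resolve_collision((1.0, -2.0, 0.0), (0.0, 1.0, 0.0), friction=0.25)
leaving = resolve_collision((0.0, 3.0, 0.0), (0.0, 1.0, 0.0))
```

Here `sliding` keeps only the motion along the surface, `slowed` has that motion halved by friction, and `leaving` is unchanged because the particle is already moving away from the collider.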

MPMAvatar, the generative AI model built on this technology, successfully reproduced the realistic movement and interaction of a person wearing loose clothing. It also succeeded in zero-shot generation, in which the AI handles data it never saw during training by inferring on its own.

The proposed method is applicable to various physical properties, such as rigid bodies, deformable objects, and fluids, allowing it to be used not only for avatars but also for the generation of general complex scenes.

Professor Tae-Kyun (T-K) Kim explained, "This technology goes beyond AI simply drawing a picture; it makes the AI understand 'why' the world in front of it looks the way it does. This research demonstrates the potential of 'Physical AI' that understands and predicts physical laws, marking an important turning point toward AGI (Artificial General Intelligence)."

He added, "It is expected to be practically applied across the broader immersive content industry, including virtual production, films, short-form content, and adverts, creating significant change."

The research team is currently expanding this technology to develop a model that can generate physically consistent 3D videos simply from a user's text input.

More information: Changmin Lee et al, MPMAvatar: Learning 3D Gaussian Avatars with Accurate and Robust Physics-Based Dynamics, arXiv (2025). DOI: 10.48550/arxiv.2510.01619

Journal information: arXiv

Provided by The Korea Advanced Institute of Science and Technology (KAIST)

Citation: AI model accurately renders garment motions of avatars (2025, October 22) retrieved 22 October 2025 from https://techxplore.com/news/2025-10-ai-accurately-garment-motions-avatars.html
